Yunzhen Feng

Machine Learning Researcher

I'm a final-year PhD candidate at the Center for Data Science, New York University, under the supervision of Prof. Julia Kempe. My research spans synthetic data, reinforcement learning, and LLM reasoning, with a recent focus on the reliability and memory mechanisms of LLM agents.

At NYU, I have had the privilege of collaborating with Prof. He He, Prof. Tim G. J. Rudner, Prof. Qi Lei, and Prof. Yaqi Duan. My industry experience includes internships at Meta's Superintelligence Labs (MSL) in 2025, where I was advised by Anthony Hartshorn, Cheng Zhang, and Parag Jain, and at Meta's Fundamental AI Research (FAIR) team in 2024, advised by Yann Ollivier and François Charton. In 2022, I interned at Uber, focusing on causal machine learning for marketplace optimization with Jason Dowlatabadi, Chen Xu, Prof. Stefan Wager, and Prof. Peter Frazier.

I graduated from Peking University with a bachelor's degree in Applied Mathematics (Honor Track). There, I was advised by Prof. Bin Dong and Prof. Di He, and was fortunate to work with Prof. Yue M. Lu from Harvard.

Feel free to contact me for research collaborations or other engagements. I am actively looking for full-time positions in industry for 2026.

Selected Publications

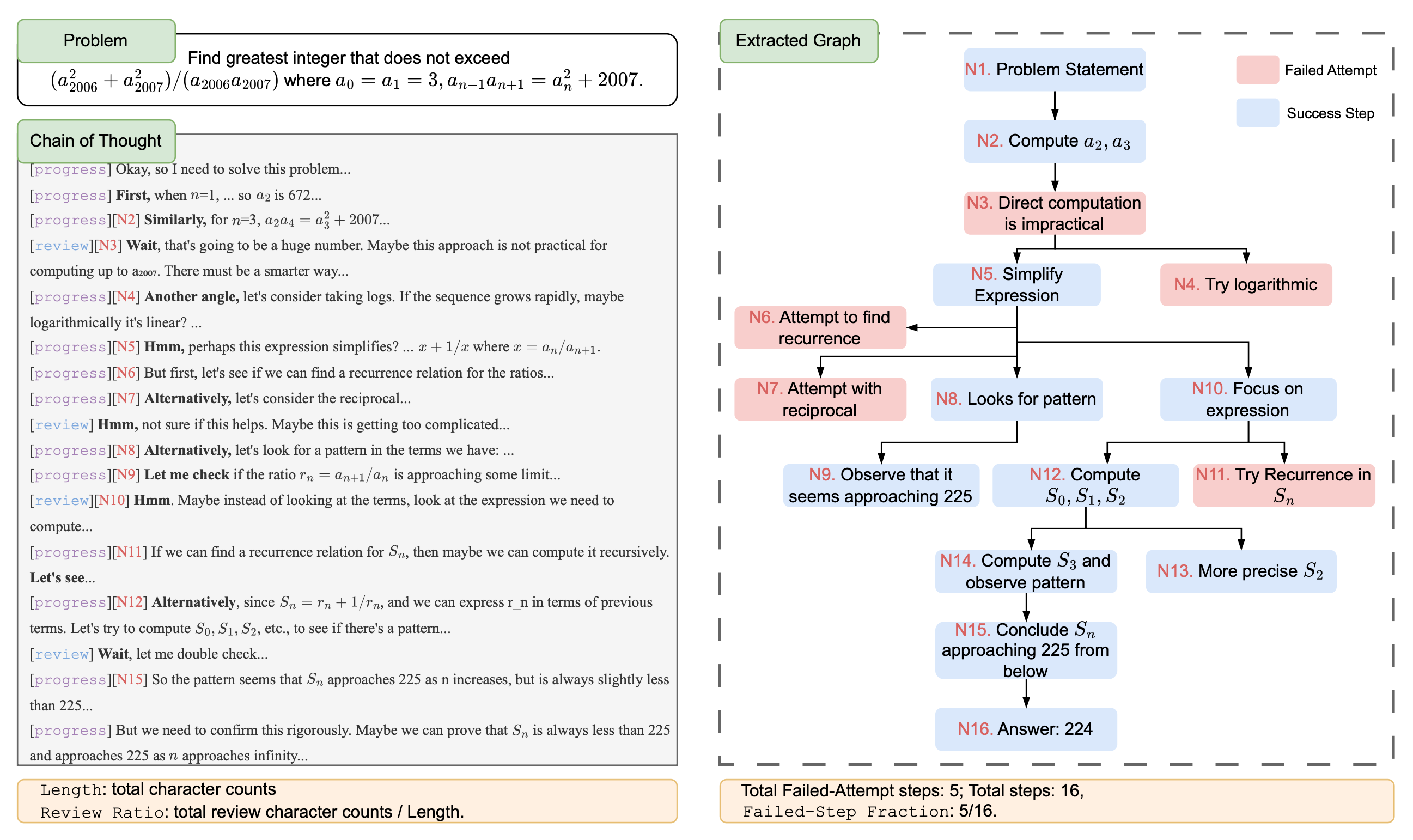

What Characterizes Effective Reasoning? Revisiting Length, Review, and Structure of CoT

Yunzhen Feng, Julia Kempe, Cheng Zhang, Parag Jain, Anthony Hartshorn.

NeurIPS Workshop on Efficient Reasoning, 2025, Spotlight.

[Paper]

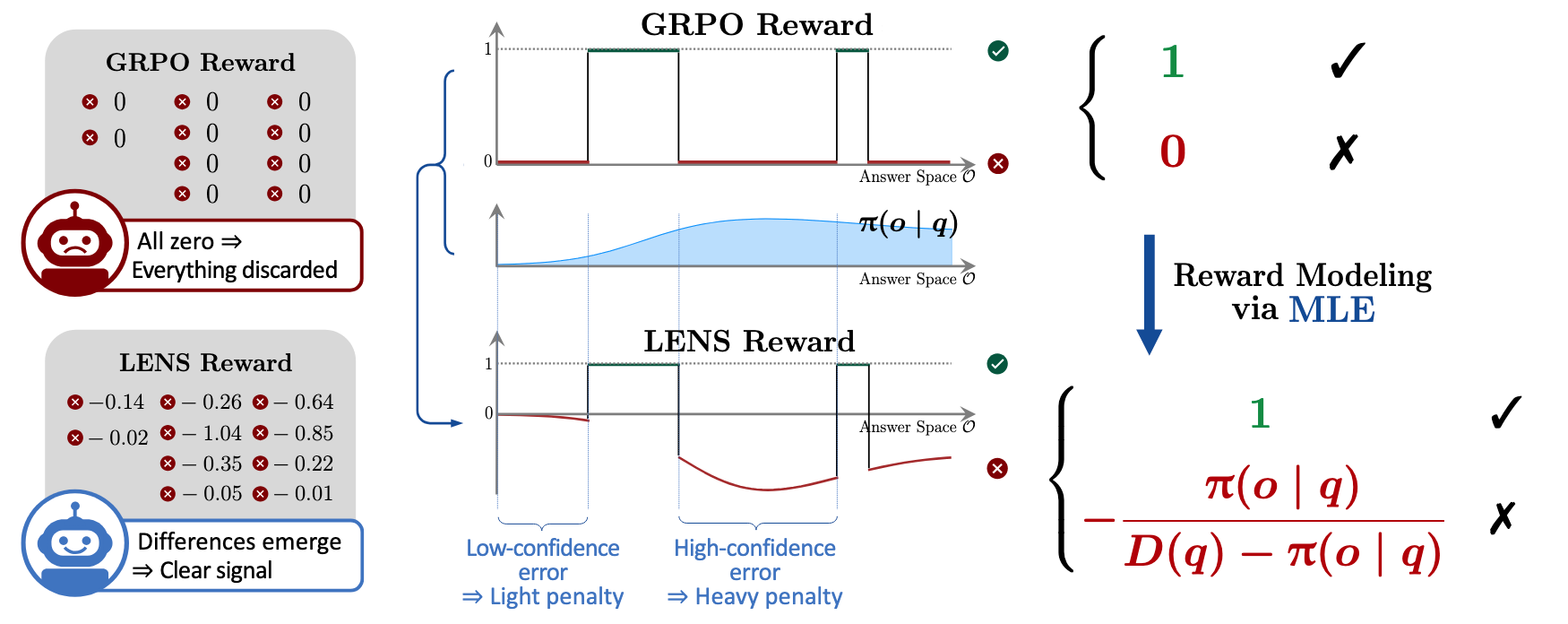

Don’t Waste Mistakes: Leveraging Negative RL-Groups via Confidence Reweighting

Yunzhen Feng, Parag Jain, Anthony Hartshorn, Julia Kempe, Yaqi Duan.

[Paper]

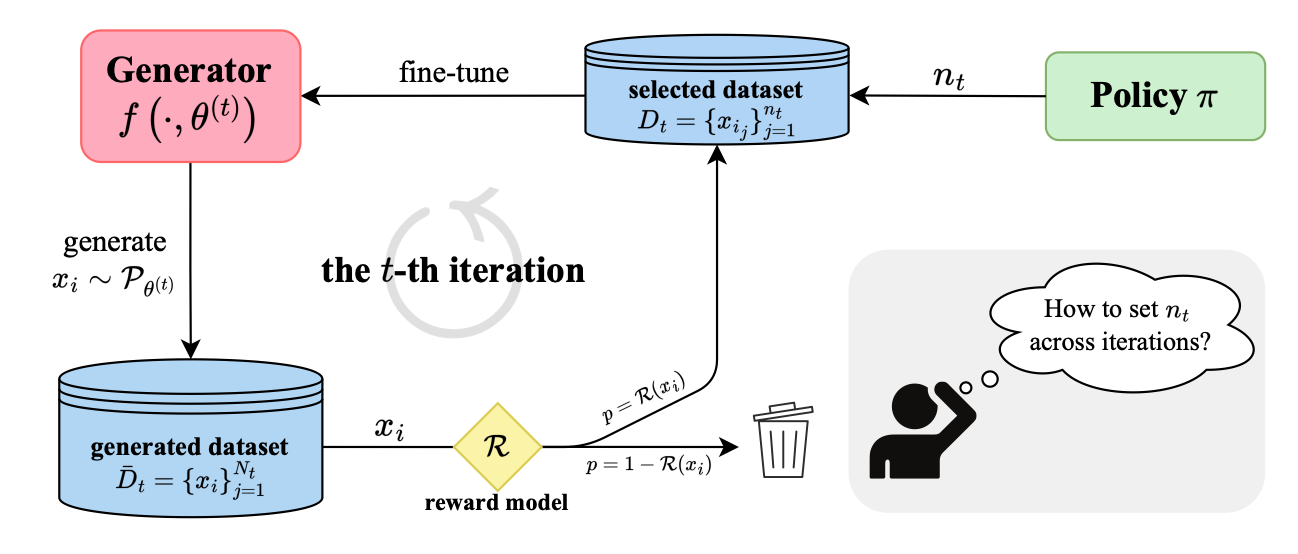

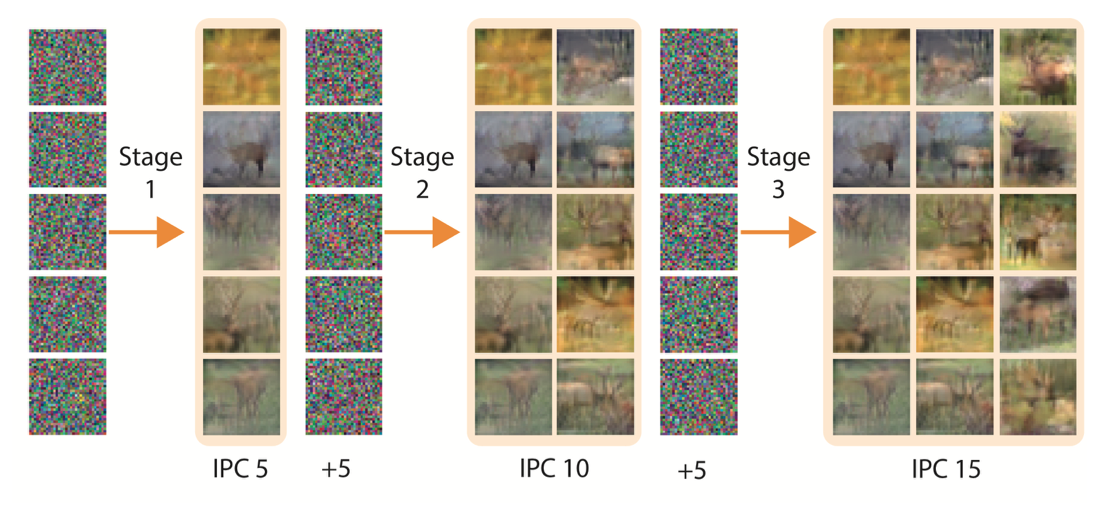

Spend Wisely: Maximizing Post-Training Gains in Iterative Synthetic Data Bootstrapping

Pu Yang*, Yunzhen Feng*, Ziyuan Chen*, Yuhang Wu, Zhuoyuan Li.

Thirty-ninth Annual Conference on Neural Information Processing Systems (NeurIPS), 2025, Spotlight.

[Paper]

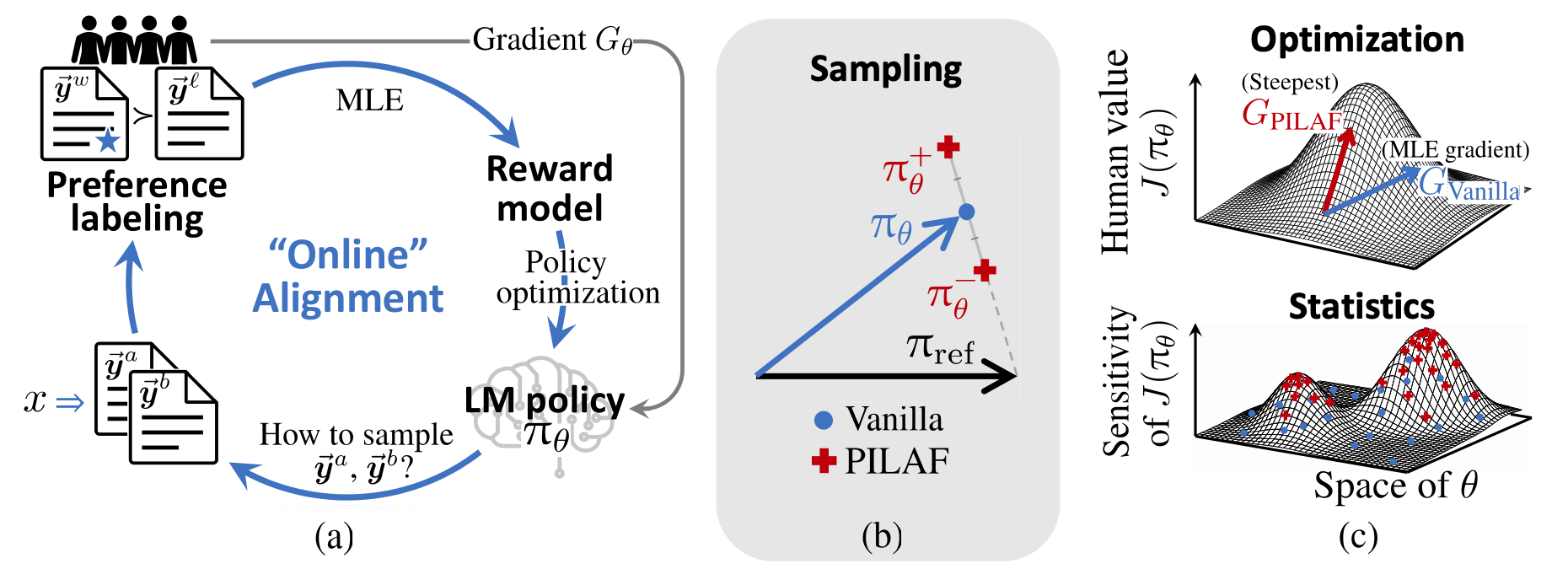

PILAF: Optimal Human Preference Sampling for Reward Modeling.

Yunzhen Feng, Ariel Kwiatkowski, Kunhao Zheng, Julia Kempe, Yaqi Duan.

International Conference on Machine Learning (ICML), 2025.

[Paper]

Strong Model Collapse.

Elvis Dohmatob, Yunzhen Feng, Arjun Subramonian, Julia Kempe.

International Conference on Learning Representations (ICLR), 2025, Spotlight.

[Paper]

Media Coverage: [新智元] [机器之心] [German Radio Deutschlandfunk Series]

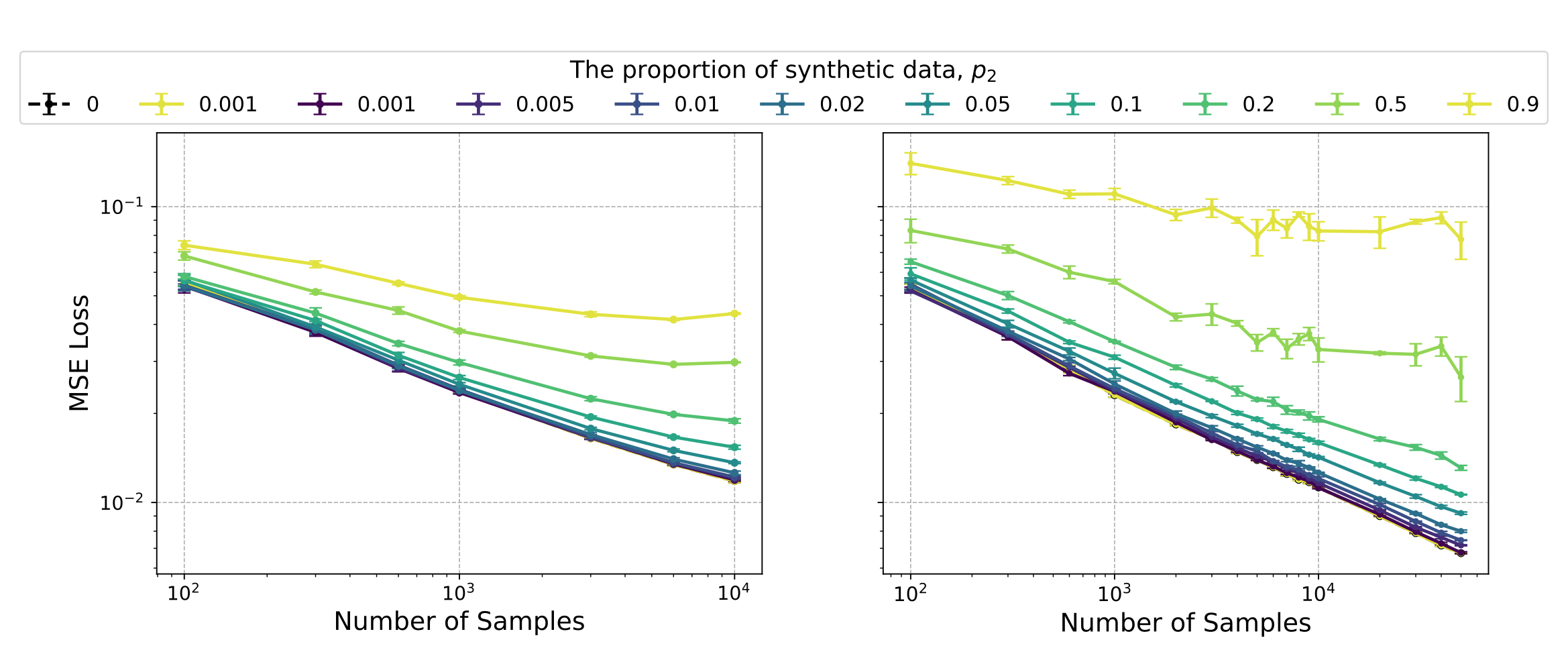

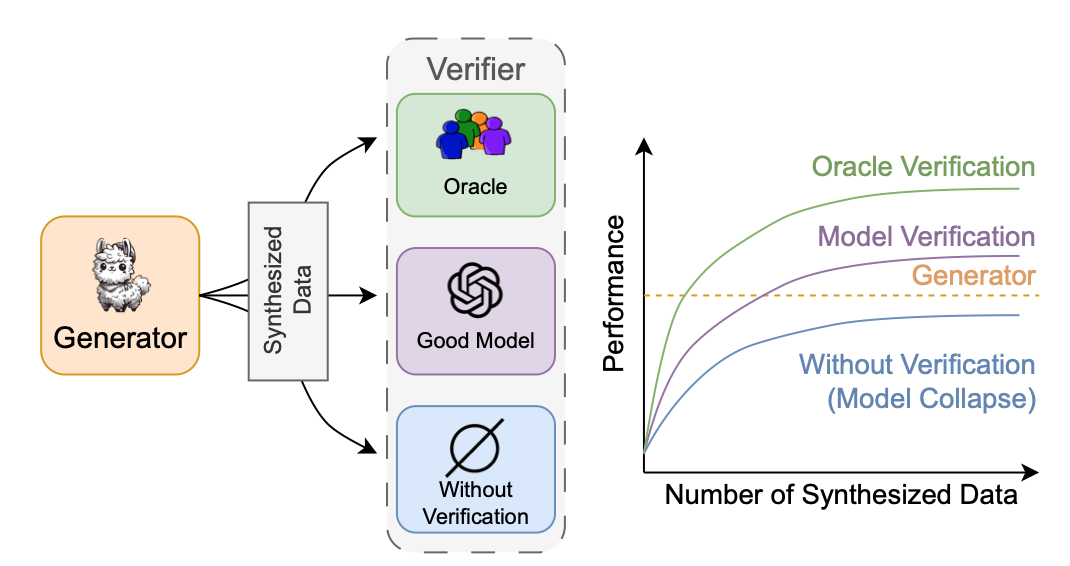

Beyond Model Collapse: Scaling Up with Synthesized Data Requires Verification.

Yunzhen Feng*, Elvis Dohmatob*, Pu Yang*, François Charton, Julia Kempe.

International Conference on Learning Representations (ICLR), 2025.

[Paper]

Media Coverage: [Nature News] [Communications of the ACM] [Transformer Ai.] [The NYU Data Science News] [MarkTechPost]

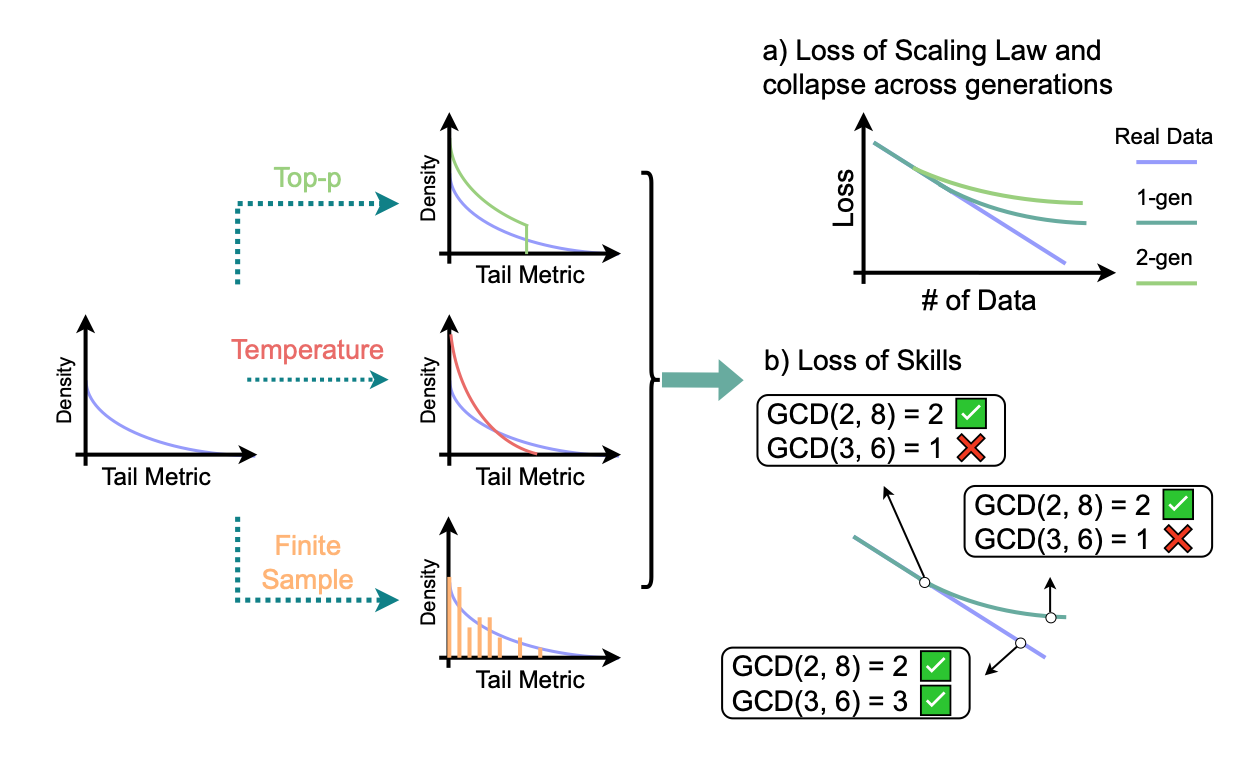

A Tale of Tails: Model Collapse as a Change of Scaling Laws

Yunzhen Feng*, Elvis Dohmatob*, Pu Yang, François Charton, Julia Kempe.

International Conference on Machine Learning (ICML), 2024.

[Paper]

Media Coverage: [New York Times] [Les Echos] [The Register] [The Globe and Mail]

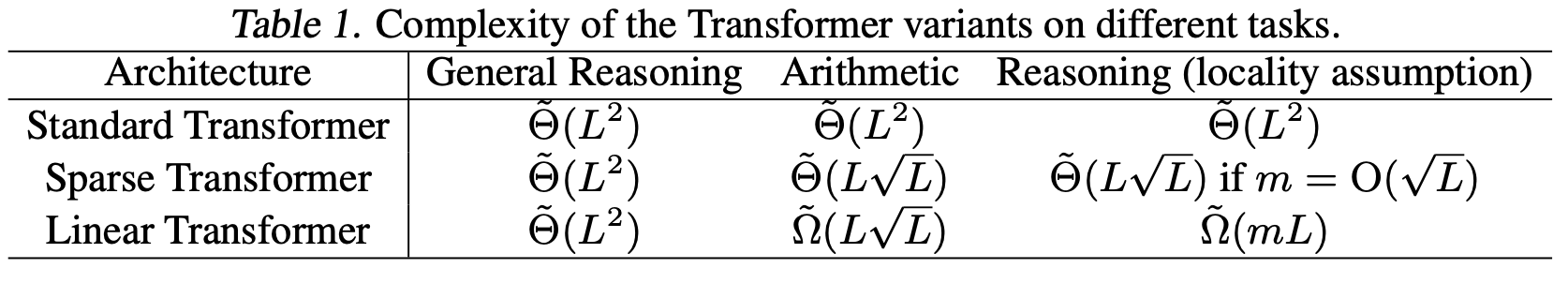

Do Efficient Transformers Really Save Computation?

Kai Yang, Jan Ackermann, Zhenyu He, Guhao Feng, Bohang Zhang, Yunzhen Feng, Qiwei Ye, Di He, Liwei Wang.

International Conference on Machine Learning (ICML), 2024.

[Paper]

Education

New York University, New York, NY. Candidate for Doctor of Philosophy in Data Science. Sep. 2021 – Summer 2026 (projected).

Peking University, Beijing, China. Bachelor of Science in Applied Mathematics (Honor Track). Sep. 2017 – Jul. 2021.

Miscellaneous

I love the outdoors. In August 2021, I climbed Luodui Mount (a 6,010 m snow mountain) in Tibet, China. I also served as a teaching assistant for *Outdoor Exploration* at Peking University. Outdoor experiences have helped shape my personality and my approach to life.